“All problems in computer science can be solved by another level of indirection.” - David Wheeler

In the previous articles, we defined what serverless and FaaS actually mean, looked at possible use cases, and examined a wide selection of FaaS platforms.

You are now fully informed, have a use case that fits serverless, have chosen your preferred FaaS platform and, optionally, some serverless services. Before you rush off to start building the next great product using serverless, there are some things you should keep in mind when it comes to serverless development.

Invisible servers

Whether your company deploys a serverless stack on premises or uses managed services and FaaS platforms from cloud providers, as a developer you do not get access to the underlying servers. This requires a shift in the way you approach fundamental processes such as debugging, testing, and monitoring. Observability is achieved through external monitoring, logging, and tracing services.

Unit tests still make up the majority of tests, and most vendors offer some form of local testing: AWS SAM CLI, serverless-offline, Architect, etc. However, there should be a much stronger focus on integration tests than before, because there is no good way to locally test all the interactions between your functions and the interfaces exposed by the external services you integrate with. Service mocks are useful, but in the fast-moving cloud environment, where interfaces change and new features are added all the time, keeping them up to date becomes strenuous (for more information on serverless testing see this article and this article).

This paradigm shift does complicate things, but considerable effort is going into bringing the new tooling and ways of working to maturity. The serverless ecosystem is growing at a fast pace, with services such as Thundra, DashBird, and IOpipe for observability, TriggerMesh for multi-cloud management, as well as Serverless Framework, Seed for CI/CD, and Stackery (see this picture for an overview of the serverless ecosystem).
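To make the point about unit tests and service mocks more concrete, here is a minimal sketch in Python. The handler, its `storage` parameter, and the `put` method are hypothetical names chosen for illustration; they are not the API of any particular provider.

```python
from unittest.mock import Mock


def handler(event, storage):
    """Store the submitted note and report where it was saved."""
    key = f"notes/{event['note_id']}.txt"
    storage.put(key, event["text"])  # 'put' is an assumed method name
    return {"status": "stored", "key": key}


def test_handler_stores_note():
    # The mock stands in for the real storage service, so no network is needed.
    storage = Mock()
    result = handler({"note_id": "42", "text": "hello"}, storage)
    storage.put.assert_called_once_with("notes/42.txt", "hello")
    assert result == {"status": "stored", "key": "notes/42.txt"}


test_handler_stores_note()
```

A test like this only checks your own assumptions about the service interface. If the real service behaves differently, the mock will not tell you, which is why integration tests against the deployed service remain necessary.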

No more monoliths

Unless you plan on bundling your entire application inside a single Lambda function, a monolithic architecture is no longer possible. Your architecture is now microservices/serverless oriented by default and event-driven architectures are a natural fit. This is not to say that monoliths are a bad design choice, but simply that when using serverless, it is necessary to think in terms of multiple components connected over an unreliable network.

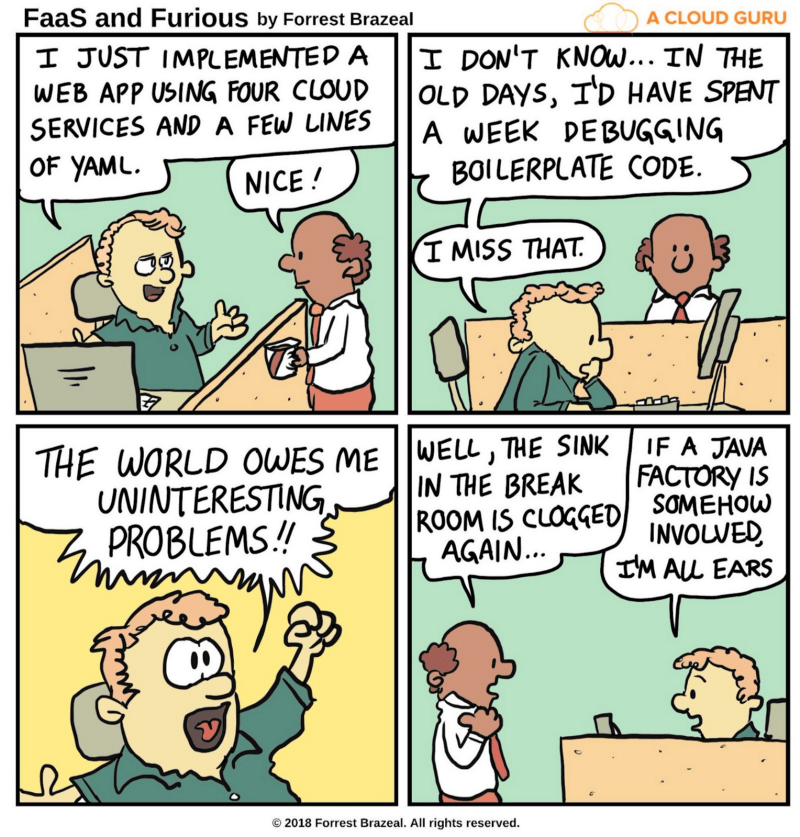

Less boilerplate code

When services such as EC2, GCE, and Azure Virtual Machines were introduced, a clear benefit was offloading part of the operational responsibility to the cloud provider. Managed container orchestration services such as GKE, EKS, and AKS took this one step further. Serverless takes this to the next level, as the entire operational side becomes the responsibility of the provider. You supply the code and the provider ensures it runs whenever needed, while taking care of concerns such as provisioning, scaling, and patching. Serverless greatly reduces the amount of boilerplate code you have to write, allowing you to operate at a higher abstraction level that hides the OS, the runtime, and even container management and orchestration from you.

Fine-grained security

The FaaS model lends itself well to the single responsibility principle, with each function performing a specific task. This has benefits for security, as each function only gets the minimal permissions required to fulfill its purpose, thus significantly reducing the attack surface for hackers. Moreover, since patching is handled by the cloud provider, you have one less thing to worry about when a new vulnerability is discovered.

Cold starts

Serverless development comes with its own set of peculiarities, and an essential one to get used to is the cold start. Since FaaS platforms run your code on demand, when a request first hits a function the platform provisions a function instance (an execution environment for your function, usually a container), injects the runtime and the dependencies, and only then executes the function. This is called a cold start. Cloud providers usually keep previously created function instances around for a limited time and route new requests to them. Such executions are called warm starts. There is a substantial time difference between a cold start and a warm start, influenced by factors such as the number and size of the dependencies, the runtime used, and the time it takes to build a container.

Depending on the usage patterns of your application, you will see more or fewer cold starts, which in turn directly impacts the response time. The simplest mitigation strategy is called function pinging and involves periodically calling your functions to ensure they stay warm. Both managed and open-source FaaS platforms also employ a variety of techniques for mitigating cold starts, such as container pre-warming (keeping pre-built containers around that can be used when new function requests come in). For a detailed explanation regarding cold starts and mitigation techniques, see this excellent article.
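As a rough sketch of function pinging, assume a scheduler calls the function every few minutes with an event that carries a `warmup` flag. That flag is a convention invented for this example, not something a platform defines. The handler can then recognise the ping and return immediately:

```python
import time

# Module-level code runs once per function instance, i.e. on a cold start.
INSTANCE_STARTED_AT = time.time()


def is_warmup(event):
    """Return True for scheduled ping events (hypothetical 'warmup' flag)."""
    return isinstance(event, dict) and event.get("warmup") is True


def handler(event, context=None):
    if is_warmup(event):
        # Do no real work: the only goal is to keep this instance alive.
        age = time.time() - INSTANCE_STARTED_AT
        return {"warmed": True, "instance_age_s": age}
    return {"message": f"Hello, {event.get('name', 'world')}!"}
```

Note that a single ping typically keeps only one instance warm. If several requests arrive concurrently, the platform may still need to start additional instances.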

It is important to keep in mind that you have much more control over mitigation techniques if you use an open-source FaaS platform, as managed FaaS platforms expose only a limited set of controls for cold start mitigation (for example, provisioned concurrency on AWS Lambda).

Entering the scene: distributed systems theory

Everyone who has transitioned from monoliths to microservices has had to quickly become aware of the fallacies of distributed computing, the false assumptions people commonly make when dealing with distributed systems (for a detailed explanation, see this article):

1. The network is reliable.

2. Latency is zero.

3. Bandwidth is infinite.

4. The network is secure.

5. Topology doesn’t change.

6. There is one administrator.

7. Transport cost is zero.

8. The network is homogeneous.

A failure to understand that assumptions made in monolithic architectures no longer apply to distributed systems can lead to trouble down the road. Awareness of distributed systems issues, such as network bandwidth and latency limitations, failure modes, consistency models, consensus, and idempotency is crucial. Though it allows you to operate at a higher abstraction level than microservices and masks some of these concerns, serverless still very much implies distributed systems with all the benefits and drawbacks.

Functions are stateless, meaning one execution of a function is completely independent from another; you should assume that no data will survive between executions. In practice, most providers reuse the container after a function execution has ended, therefore data might still be available from a previous execution. This means you can still cache generic things such as service clients and database connections. However, you have no control over which container your next function execution will run in, or when the provider might decide to scrap a container. To maintain specific state when using Function-as-a-Service platforms, you have to rely on an external storage system, such as S3 or DynamoDB. When choosing the storage system, you should be aware of the latency and throughput requirements of your service.
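The caching pattern mentioned above can be sketched as follows. `FakeDatabaseClient` is a stand-in invented for this example; in a real function it would be the client of whatever service you use, and creating it is assumed to be the expensive step.

```python
_client = None  # survives between invocations only while the instance stays warm


class FakeDatabaseClient:
    """Stand-in for a real service client; creating one is assumed to be slow."""

    instances_created = 0

    def __init__(self):
        FakeDatabaseClient.instances_created += 1

    def get(self, key):
        return f"value-for-{key}"


def get_client():
    """Create the client lazily and reuse it on warm invocations."""
    global _client
    if _client is None:
        _client = FakeDatabaseClient()
    return _client


def handler(event, context=None):
    return {"value": get_client().get(event["key"])}
```

The cache is an optimisation only. The function must still work correctly when it lands in a fresh container and `_client` is empty, which is why request-specific state never belongs in module-level variables.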

Another important issue to keep in mind is that functions should be idempotent, meaning that repeated executions of a function with the same input parameters should yield the same result. Since a function execution might fail or time out at any time, most providers include an automatic retry mechanism, but sometimes you will have to write your own. If your functions have side effects, retrying failed executions might have unintended consequences. If a function execution has a side effect before failing, such as writing a record to a database, then a retry will repeat that side effect and write a duplicate record. There are many ways to deal with this issue, and you can find a good tutorial here.
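One common way to make a write idempotent is to key it on a unique request ID and only write when that ID has not been seen before. The sketch below uses an in-memory store with a hypothetical `put_if_absent` method. A real implementation would rely on an atomic conditional write in the external store, which many databases offer in some form.

```python
class InMemoryStore:
    """Stand-in for an external store with a conditional write (hypothetical)."""

    def __init__(self):
        self.records = {}

    def put_if_absent(self, key, value):
        """Write only if the key is new; return True when the write happened."""
        if key in self.records:
            return False
        self.records[key] = value
        return True


def handler(event, store):
    # The caller supplies a unique request ID; a retry reuses the same ID.
    created = store.put_if_absent(event["request_id"], event["payload"])
    return {"request_id": event["request_id"], "created": created}


store = InMemoryStore()
event = {"request_id": "r-1", "payload": {"amount": 10}}
first = handler(event, store)
retry = handler(event, store)  # simulates the platform retrying the same event
```

Here the retry leaves the store untouched, so the record exists exactly once no matter how many times the event is delivered.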

If you plan on composing multiple functions and services to form a workflow (which is often the case), dealing with the complexity of handling statelessness, failures, retries, etc. might be too much to handle. In this case, you should consider using function workflow orchestrators such as Fission Workflows, AWS Step Functions or Azure Durable Functions. These systems simplify function composition and provide some features such as fault tolerance out-of-the-box.

Heavy traditional frameworks might not be a good fit

Some characteristics of serverless computing are in direct opposition to the previous monolithic paradigm (and, to a much lesser degree, to microservices). Heavy frameworks, such as Spring for Java developers, are not a good fit for stateless, short-running functions, which have limited memory and whose cost is tied directly to allocated memory.

Most providers have a deployment package size limit of tens to a few hundred MB. The size of the deployment package greatly impacts the function deployment time, since the package has to be transferred over the network to its designated container. The time it takes for the framework to initialize, coupled with the time it takes for the deployment package to be transferred over the network, directly impacts the cold start time of a function. This leads to two problems:

1. The response time of your application can be high, depending on the traffic, and thus directly impacts the user experience.

2. You are charged for the extra time the function takes to execute, since the cost model of FaaS is pay-per-use.

A heavy framework also has a higher memory footprint. Most FaaS providers charge more for higher allocated memory per function, so your costs will increase.

Granular, pay-per-use billing and its implications

The pricing model introduced by serverless is a game changer. Not having to pay for idle time allows you to approach software development and deployment differently.

First, it is now economically sensible to have many environments available for different phases of the development lifecycle. Since you only pay for actual usage, you can create as many development, staging, and acceptance environments as you want. Traditionally, non-production environments would be stripped down versions of the production environment to reduce costs. With serverless, they can be identical to the production environment without incurring extra infrastructure costs. Disclaimer: this does not apply to self-hosted FaaS platforms.

Second, this pricing model allows you to run as many A/B tests as you want without impacting the cost. Since you are only paying for what is actually used, you end up splitting the same cost over multiple versions of your application. This makes running frequent experiments affordable to small businesses too, not just to industry behemoths, such as Amazon, Google, and Facebook.

Finally, a granular, pay-per-use billing model gives you much more insight into the profitability of individual features. Cloud providers are charging you for your usage and they quantify that using metrics, such as number of requests or memory used by a function. Since that information is also made available to you, it is possible to determine exactly how much a feature is costing you to operate or whether you are generating a profit or a loss from different clients.
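As a back-of-the-envelope sketch, the cost of a feature can be estimated from the metrics mentioned above. The model below assumes a charge per request plus a charge per GB-second of execution and ignores free tiers. The prices and usage figures are illustrative placeholders, not a quote from any provider's price list.

```python
def estimate_cost(requests, avg_duration_s, memory_gb,
                  price_per_million_requests, price_per_gb_second):
    """Estimate cost as a request charge plus a compute (GB-second) charge."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


# Illustrative prices only; check your provider's current price list.
PRICES = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.0000166667}

# Hypothetical monthly usage per feature, taken from your monitoring data.
FEATURES = {
    "search": {"requests": 3_000_000, "avg_duration_s": 0.2, "memory_gb": 0.5},
    "export": {"requests": 50_000, "avg_duration_s": 4.0, "memory_gb": 1.0},
}


def cost_per_feature(features, prices):
    return {name: estimate_cost(**usage, **prices) for name, usage in features.items()}


for name, cost in cost_per_feature(FEATURES, PRICES).items():
    print(f"{name}: ${cost:.2f} per month")
```

Comparing these figures with the revenue attributed to each feature or client gives a first approximation of where you make or lose money.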

For this section, I have borrowed insights from Gojko Adzic, who has written extensively on serverless. If you want to learn more about serverless, I would strongly recommend his latest book: Running Serverless: Introduction to AWS Lambda and the Serverless Application Model.

(Some) Lock-in is inevitable

People wanting to paint serverless in a negative light often mention vendor lock-in as a major concern, some going as far as calling it “one of the worst forms of proprietary lock-in we’ve ever seen in the history of humanity”. However, things are moving fast in the serverless world, and FaaS platforms offered by large cloud providers, such as AWS Lambda and Azure Functions, are not the only ones around anymore. There are many production-ready open-source alternatives, such as Apache OpenWhisk and Fission (for a detailed comparison of FaaS platforms, see my previous article). Most of them can run on top of a container orchestrator such as Kubernetes, which you can either get as a managed service (EKS, GKE, AKS, etc.) or deploy yourself on cloud infrastructure or even on premises.

Therefore, if you want the least amount of lock-in, you can deploy an open-source FaaS platform on your own infrastructure. The community is working hard towards facilitating multi-cloud deployments. There are a variety of frameworks designed to simplify the process, such as Serverless Framework, TriggerMesh, and Zappa. The Cloud Native Computing Foundation (CNCF) is also working towards serverless standards with projects such as CloudEvents, which provides an open standard for describing events.
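To give an idea of what CloudEvents standardises, here is a small sketch that builds an event envelope as a plain dictionary. The `specversion`, `id`, `source`, and `type` attributes are the required context attributes in version 1.0 of the specification; the event type, source, and payload below are made-up examples, and the helper function is not part of any SDK.

```python
import json
import uuid
from datetime import datetime, timezone


def make_cloudevent(event_type, source, data):
    """Build a CloudEvents 1.0 style envelope as a plain dict."""
    return {
        "specversion": "1.0",           # required
        "id": str(uuid.uuid4()),        # required, unique per source
        "source": source,               # required, identifies the producer
        "type": event_type,             # required, describes what happened
        "time": datetime.now(timezone.utc).isoformat(),  # optional timestamp
        "datacontenttype": "application/json",           # optional
        "data": data,                   # the event payload itself
    }


event = make_cloudevent("com.example.order.created", "/orders", {"order_id": 1})
print(json.dumps(event, indent=2))
```

Because every producer describes its events with the same attributes, a consumer can route and filter events without knowing which platform emitted them.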

All things considered, serverless by itself does not imply any more lock-in than any previous technology or framework. If you so wish, you can write abstractions over the FaaS platform and managed services and end up with a true multi-cloud solution. However, that will involve a great deal of development effort and will limit you to the lowest common denominator for all the cloud providers you want to support. What serverless does offer you is a better deal if you do decide to accept some lock-in. You can take advantage of provider-specific features, benefit from out-of-the-box security and compliance, and leverage easy integration with various managed services and event sources.
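A lightweight version of such an abstraction is to keep the business logic free of provider details and wrap it in thin adapters. In the sketch below, the first adapter assumes an AWS Lambda function behind an API Gateway proxy integration, where the request body arrives as a string in `event["body"]`. The second adapter targets a hypothetical platform that passes the raw request body; all function names are invented for this example.

```python
import json


def create_greeting(name):
    """Provider-independent business logic."""
    return {"greeting": f"Hello, {name}!"}


def aws_lambda_handler(event, context):
    """Adapter for AWS Lambda behind an API Gateway proxy integration."""
    body = json.loads(event.get("body") or "{}")
    result = create_greeting(body.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(result)}


def generic_http_handler(request_body):
    """Hypothetical adapter for a platform that passes the raw request body."""
    body = json.loads(request_body or "{}")
    return json.dumps(create_greeting(body.get("name", "world")))
```

Adapters like these cover only the invocation interface. Triggers, permissions, and managed services differ between providers as well, which is where most of the development effort mentioned above goes.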

Conclusion

At its core, serverless development requires a change in mindset. Microservices required a fundamental shift in our development practices; these practices should be further revised and refined with the emergence of serverless computing. The purpose of new technologies has always been to support and empower great business ideas. Serverless offers a clear path towards delivering business value, abstracting away operational concerns, and greatly decreasing time-to-market. For developers, it means less focus on the underlying infrastructure and operational concerns, but also more focus on distributed systems and ecosystems.

Developers should embrace the faster prototyping abilities and aim for shorter, fine-grained iterations. Serverless greatly lowers the risk of developing new features: fine-grained pay-per-use billing and the delegation of operational concerns allow developers to safely test out even the most far-fetched ideas, because the cost of failure is minimal. Developers should also be aware, but not afraid, of the immature tooling that is inevitable given the recency of the technology. There is much opportunity to define best practices, build new tooling, and help shape the development of serverless computing.