“All problems in computer science can be solved by another level of indirection.” — David Wheeler

In the previous article we introduced serverless computing, a natural evolution of cloud computing, filling the gaps left by established concepts such as IaaS, PaaS, and SaaS.

We saw that it brings the promise of fully managed infrastructure, true pay-as-you-go billing, fine-grained auto-scaling, and much more. In this article, I want to address more practical matters:

How are companies and institutions leveraging serverless technologies to deliver more value?

There are many perks to serverless, but as we have often seen in the IT industry, there is no silver bullet. Therefore, we must consider use cases that can employ serverless to great benefit, as well as use cases where serverless would do more harm than good.

Where serverless shines

Serverless brings a unique set of features to the table, giving you the precision of a scalpel in situations where you were previously forced to hack your way through with a machete. On the one hand, its event-driven approach will serve you well whenever you have periodic jobs to run or want to integrate with other services in an asynchronous manner (e.g. using webhooks or message queues). On the other hand, its fine-grained, near-instant scalability is a perfect fit for applications that are highly parallelizable and/or have high traffic variability. Coupled with fully managed infrastructure and a fine-grained cost model, what you get is a highly attractive package for a variety of situations. In this section, we will explore use cases that are well-suited to serverless.

Displacing web servers

Implementing web backends and APIs using serverless components provides clear benefits. A FaaS platform like AWS Lambda behind an API gateway, along with an object store like S3 for the frontend code, is all you need to get started. You get seamless auto-scaling that adjusts to your traffic patterns out of the box, and infrastructure management is no longer a concern. Even better, you no longer have to pay for over-provisioned VMs during periods of low activity, and you no longer have to keep a team on call whenever you are anticipating traffic surges. Serverless makes it easy to build feature-rich web applications by facilitating plug-and-play architectures. Functionality you would otherwise end up implementing over and over, like authentication and credit card payments, can be delegated to highly reliable third-party services, such as Auth0 and Stripe. This allows your codebase to remain compact and focused mostly on the business logic.
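To make this more concrete, here is a minimal sketch of what a function behind an API gateway could look like. It assumes the request and response shapes used by API Gateway's Lambda proxy integration; the greeting logic and the `name` parameter are made up for illustration.

```python
import json


def handler(event, context):
    """Entry point the FaaS platform invokes once per HTTP request."""
    # With the proxy integration, query parameters arrive under
    # "queryStringParameters", which is None when the request has none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # "name" is an illustrative parameter

    # The gateway turns this dictionary into the HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Notice that there is no server, port, or routing code here: the platform decides when and where the function runs, and the gateway handles the HTTP details.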

Bustle, a media company, operates a platform with over 50 million monthly readers. Their website leverages a combination of Lambda, API Gateway, and other services. High volumes of metrics data are processed in real time using AWS Lambda and Kinesis Streams. They have managed to cut their costs almost in half by using serverless technologies.

Coca-Cola is transitioning its infrastructure to the cloud and has successfully employed serverless to achieve cost savings of 65%. They have adapted the communication system that connects all their vending machines to use Lambda and API Gateway. This communication system is used by the service team to get notifications in case of low stock or defects. It is also used by the marketing team to deploy campaigns and to handle payments when users buy a beverage. Instead of running VMs that mostly sit idle (along with all the essential infrastructure required to provide services, such as load balancing and security), Coca-Cola employs an event-driven serverless architecture that only runs when necessary. One key takeaway from their experience is that serverless can help gracefully scale down systems as they approach end-of-life. At the tail end, cost savings can be as high as 99%.

The list goes on, with companies such as CodePen, a popular code sharing platform, serving 200,000 requests/hour with a single DevOps engineer, and MindMup, servicing 400,000 monthly users with a $100 monthly AWS bill. More details on these case studies and serverless in general can be found in this excellent book.

Stream and batch processing

Big data and serverless are a natural fit. Serverless offers massive scalability and its event-driven focus is well-suited for both batch and real-time processing. The days of managing large, costly Hadoop or Spark clusters, running around-the-clock whether they are needed or not, are gone. A full-fledged data processing pipeline can be built using FaaS as compute, along with other serverless services such as AWS Kinesis or Google Cloud Pub/Sub.

FaaS is perfect for scheduling batch jobs like report generation and automatic backups. A function can be configured to run based on a specific schedule and launch a new job. Alternatively, a serverless system can be configured to respond whenever new data is added to the storage layer, enabling real-time data analytics and transformation.
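As a rough sketch of the second approach, the function below reacts to a notification that new objects have landed in storage. It assumes the record layout of S3 event notifications; `transform` is a hypothetical placeholder for whatever processing you actually need.

```python
from urllib.parse import unquote_plus


def new_objects(event):
    """Return (bucket, key) pairs for every record in an S3-style notification."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys are URL-encoded in S3 event notifications.
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def transform(bucket, key):
    """Hypothetical processing step; a real one would read and analyze the object."""
    return f"processed s3://{bucket}/{key}"


def handler(event, context):
    """Invoked by the platform whenever new data is added to the storage layer."""
    return [transform(bucket, key) for bucket, key in new_objects(event)]
```

The same handler shape works for scheduled jobs: only the trigger changes, from a storage notification to a timer.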

Complex data processing workflows can be easily implemented by composing functions. There are multiple solutions available, such as Fission Workflows, IBM Composer, and AWS Step Functions. It is possible to make use of fully managed services like AWS Glue to define event-driven ETL pipelines, analyze log data, and perform queries against data lakes. Other options for serverless stream and batch processing are services such as Google Cloud Dataflow and Azure Data Factory.
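The idea behind function composition can be illustrated in a few lines of plain Python. This is not the API of any of the workflow services mentioned above, just a local sketch of the concept; the three steps and the log format are invented for the example.

```python
from functools import reduce


def compose(*steps):
    """Chain steps left to right: each step receives the previous step's output."""
    def run(payload):
        return reduce(lambda acc, step: step(acc), steps, payload)
    return run


def parse(lines):
    """Split comma-separated log lines into [source, level] rows."""
    return [line.split(",") for line in lines]


def keep_errors(rows):
    """Keep only the rows whose level is ERROR."""
    return [row for row in rows if row[1] == "ERROR"]


def summarize(rows):
    """Reduce the remaining rows to a small report."""
    return {"errors": len(rows)}


pipeline = compose(parse, keep_errors, summarize)
report = pipeline(["api,ERROR", "web,OK", "db,ERROR"])
```

In a real workflow engine, each step would be a separately deployed function, and the engine would take care of passing results along, retrying failures, and branching.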

Jampp is a marketing platform using data analytics and machine learning to provide user acquisition and retargeting services. Working with clients such as Twitter and Yelp, and processing 75 terabytes of data daily, the company had to deal with exponential growth as their services increased in popularity. Their initial solution, using EC2 instances and Kafka, quickly crumbled under the pressure of dealing with millions of extra events coming from newly acquired clients. They switched to a combination of FaaS, serverless stream processing systems, and serverless databases, which allowed them to scale to 250 times the number of events that they processed on the old platform.

Thomson Reuters leveraged serverless to build a data analytics pipeline with the purpose of improving user experience across their offerings. Data is loaded from Kinesis and processed using Lambda, before finally being stored in S3. In parallel, a real-time pipeline delivers the same data to an Elasticsearch cluster. Thomson Reuters chose serverless for a few key reasons: elastic scaling in the face of unpredictable traffic (which could double or triple during specific times), fully-managed services that require minimal administration, and quick time-to-market (the development time was set at 5 months).

Media transformations

Multimedia processing is often CPU-intensive, implies a bursty workload and is highly parallelizable, making serverless a perfect match. Such workloads are generally ill-suited for traditional application servers. As their computing power is fixed, it is necessary to either overprovision or risk overloading the servers when bursty tasks are running. It is also difficult to support the level of parallelism that these tasks could benefit from. With FaaS, you can perform tasks such as image resizing, thumbnail generation, video encoding, and many other transformations on-the-fly. Jobs can be broken down into tasks that fit into single functions and thousands of such functions can be run in parallel, resulting in great speedup.
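The fan-out pattern described above can be sketched locally. In the example below, a thread pool stands in for the FaaS platform (an assumption made so the code runs anywhere), and the task only computes thumbnail dimensions rather than touching real images.

```python
from concurrent.futures import ThreadPoolExecutor


def thumbnail_size(size, max_side=128):
    """Scale (width, height) so the longer side equals max_side, keeping the aspect ratio."""
    width, height = size
    scale = max_side / max(width, height)
    return (max(1, round(width * scale)), max(1, round(height * scale)))


def fan_out(task, items, max_workers=8):
    """Run task on every item concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, items))


sizes = fan_out(thumbnail_size, [(1920, 1080), (500, 1000)])
```

On a FaaS platform, each call to the task would be a separate function invocation, so the degree of parallelism is bounded by the platform's concurrency limits rather than by the cores of a single machine.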

Netflix has to deal with thousands of video uploads from studios daily, all of which have to be processed before they are ready for streaming. Instead of inefficiently polling S3 (the blob storage they use) for new uploads, they leverage Lambda to build an event-driven pipeline. Whenever new files are uploaded, events are generated, triggering Lambda functions that split the videos into 5-minute blocks before passing them to an encoding farm.
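The splitting step is simple to picture. The helper below is a hypothetical illustration, not Netflix's code: it computes the boundaries of fixed-length blocks for a video of a given duration.

```python
def split_into_blocks(duration_s, block_s=300):
    """Return (start, end) offsets in seconds that cover the video in block_s-long pieces."""
    if block_s <= 0:
        raise ValueError("block_s must be positive")
    return [
        (start, min(start + block_s, duration_s))
        for start in range(0, duration_s, block_s)
    ]


blocks = split_into_blocks(720)  # a 12-minute video, 5-minute blocks
```

Each block can then be handed to a separate worker, with the last block simply being shorter than the rest.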

Taking this a step further, researchers from Stanford and the University of California developed ExCamera, a system that performs video encoding using FaaS as compute. ExCamera can spawn thousands of functions, each processing a small chunk of the entire video. Compared to commercial systems, such as Google’s vpxenc and YouTube’s encoder, it is able to achieve much higher levels of parallelism, leading to shorter encoding times. For example, given a 15-minute, 4K animated movie, ExCamera encoded it 60x faster than a 128-core machine using vpxenc.

SiteSpirit, a Dutch company specializing in CRMs, back office and image management, saw a 10 times speed increase at a 90% lower cost when using OpenWhisk (a FaaS platform initially developed by IBM, then donated to Apache) to perform image processing for their clients.

Internet-of-Things

When it comes to IoT applications, sensors are usually the driving factor. Such applications exhibit a reactive behaviour, with different flows of execution triggered by sensor readings exceeding or dipping below certain thresholds. Most of the time, readings are stable and little to no action is required; in case of a critical event such as a traffic jam or a natural disaster, however, the system will be hit by a barrage of data, requiring near-instant scalability. This is difficult to achieve using a traditional architecture, but comes naturally with serverless architectures. In addition, IoT systems are often cobbled together from a variety of devices, each using its own protocols; they require custom solutions to perform tasks like data aggregation and data transformation. As discussed earlier in this article, serverless is a natural fit for data processing pipelines.
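A threshold-driven function could look roughly like the sketch below. The event shape and the action names are assumptions made for illustration; a real system would use whatever format its devices and message broker produce.

```python
def classify(reading, low, high):
    """Decide what to do with one sensor reading given its thresholds."""
    if reading > high:
        return "alert_high"
    if reading < low:
        return "alert_low"
    return "ignore"  # stable reading: no action required


def handler(event, context):
    """Invoked once per reading.

    Hypothetical event shape:
    {"sensor": str, "value": float, "low": float, "high": float}
    """
    action = classify(event["value"], event["low"], event["high"])
    return {"sensor": event["sensor"], "action": action}
```

Since each reading is handled by its own invocation, a sudden barrage of readings simply results in more invocations running in parallel.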

Edge computing

Edge computing is an emerging field that can greatly benefit from leveraging serverless computing. This new paradigm is all about pushing the computation as close to the end-users as possible, whether the end-users are actual people or IoT devices. This greatly reduces perceived latency for the users and is essential for low-latency applications. Using FaaS, computing power can be sent directly to the data in the form of functions, eliminating the need for round-trips to the datacenters hosting the applications. A great example of a commercial system is Lambda@Edge. Requests can be served directly at the edge, and processing can be performed locally (e.g. machine learning tasks, such as image recognition, can be performed directly on the recording devices). With services such as AWS Greengrass, this local execution capability allows groups of connected devices to keep functioning normally in the case of poor connectivity to the cloud or network failures.

Where serverless might not be the best fit

The same scalpel-like precision that makes serverless so attractive for specific use-cases turns out to be a disadvantage in other situations. The downside of a highly-specialized tool is that it comes with strict limitations; if an application has requirements that conflict with these limitations, you would be better off seeking a different solution rather than trying to shoehorn your system into using serverless.

Cost model

Serverless has a fine-grained cost model that works great when your workload varies with time. However, FaaS platforms such as Lambda are more expensive per millisecond of computation than an equivalent VM. If your workload always keeps your system busy, with hundreds or even thousands of concurrent function invocations at any point in time, FaaS will most likely be more expensive than running your own VMs. If you have systems that deal with high traffic all the time, you might want to stick to dedicated servers for the time being. Keep in mind, even though FaaS is more expensive to run continuously, it also reduces maintenance and payroll costs.
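A back-of-the-envelope calculation can help you find the break-even point. The sketch below assumes a simple FaaS pricing model (a price per GB-second of compute plus a price per million requests) and a flat monthly VM price. All the numbers are illustrative placeholders, not any provider's actual rates, and the maintenance and payroll costs mentioned above are left out.

```python
def faas_monthly_cost(invocations, avg_duration_s, memory_gb,
                      price_per_gb_s, price_per_million_requests):
    """Estimate the monthly FaaS bill under a GB-second plus per-request model."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_s
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def break_even_invocations(vm_monthly_cost, avg_duration_s, memory_gb,
                           price_per_gb_s, price_per_million_requests):
    """Monthly invocation count at which FaaS costs as much as the VM."""
    per_invocation = (avg_duration_s * memory_gb * price_per_gb_s
                      + price_per_million_requests / 1_000_000)
    return vm_monthly_cost / per_invocation


# Placeholder prices, for illustration only.
PRICING = dict(avg_duration_s=0.1, memory_gb=0.5,
               price_per_gb_s=0.00002, price_per_million_requests=0.20)

light_load = faas_monthly_cost(1_000_000, **PRICING)
threshold = break_even_invocations(60.0, **PRICING)
```

Below the threshold, paying per invocation is cheaper than the VM; above it, the always-busy workload is better served by dedicated capacity.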

Resource limits

Most FaaS platforms limit the maximum memory allocated to a single function to a few GBs, the execution time to around 5–15 minutes, and the maximum amount of temporary disk space to a few hundred MBs. If your tasks cannot be split into pieces that can be handled by multiple functions, FaaS is not a good fit for you. You might have tasks that require handling large files on disk, tasks that do not fit into the memory available to a single function, or tasks that simply cannot be easily parallelized. In these cases, a fleet of VMs would be a better fit. Although for most cases you can find a workaround and still use serverless, there is no point in using the wrong tool for the job. If you are still intent on moving away from managing VMs, remember, there is more to serverless than FaaS. It might be a good idea to look into a managed service that suits your use case.
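Before committing to FaaS, it is worth checking a task's requirements against the platform's limits. The sketch below does exactly that; the limit values and the dictionary keys are hypothetical placeholders, so look up the actual figures for your platform.

```python
import math

# Hypothetical limits for illustration; check your platform's documentation.
LIMITS = {"memory_mb": 2048, "timeout_s": 600, "tmp_disk_mb": 512}


def violated_limits(task, limits=LIMITS):
    """Return the names of the limits that the task's requirements exceed."""
    return [name for name, cap in limits.items() if task.get(name, 0) > cap]


def pieces_needed(total, cap):
    """Minimum number of equal pieces so that each piece fits within cap."""
    if cap <= 0:
        raise ValueError("cap must be positive")
    return max(1, math.ceil(total / cap))


task = {"memory_mb": 8192, "timeout_s": 120, "tmp_disk_mb": 100}
problems = violated_limits(task)
pieces = pieces_needed(task["memory_mb"], LIMITS["memory_mb"])
```

If the task can actually be split into that many independent pieces, FaaS remains an option; if it cannot, that is a strong sign that VMs or a managed service are the better choice.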

Low latency applications

Functions are stateless, so they must load the data required for each task from remote storage, which adds network latency to every invocation. In addition, whenever a function is invoked, it first needs a container in which to run. If no such container is available, the platform constructs a fresh one and initializes it with all the dependencies required to run the function. This process is called a cold start, and depending on the function code, programming language used, and dependencies required, it can take between a few hundred milliseconds and a few seconds. If your application has exceedingly low latency requirements, FaaS might not be a good fit. As network throughput and reliability continue to improve and ways are found to mitigate cold starts, such use cases might become the norm. For low-latency applications, open-source platforms are your best bet, as many of them are starting to provide ways to mitigate cold starts, such as container pre-warming. If you would still prefer a managed FaaS platform that is focused on performance and low latency, have a look at Nuclio. For a detailed discussion on optimization techniques employed by FaaS platforms to reduce cold starts, I recommend reading this article.
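On the application side, a common way to soften the impact of cold starts is to perform expensive setup once per container and reuse it across invocations. The sketch below assumes the platform keeps a container (and therefore module-level state) alive between invocations; `_load_resources` is a hypothetical stand-in for slow initialization such as loading a model or opening connections.

```python
import time

_resources = None   # survives between invocations while the container stays warm
_init_count = 0     # how many times the expensive setup has run in this container


def _load_resources():
    """Hypothetical stand-in for slow setup work."""
    global _init_count
    _init_count += 1
    return {"loaded_at": time.time()}


def handler(event, context):
    """Pay the setup cost only on the first (cold) invocation of this container."""
    global _resources
    cold = _resources is None
    if cold:
        _resources = _load_resources()
    return {"cold_start": cold, "init_count": _init_count}


first = handler({}, None)
second = handler({}, None)
```

This does not remove the cold start itself, since a fresh container still has to be created, but it keeps warm invocations from repeating work they do not need to do.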

Tight deadlines

Using serverless requires a different way of thinking than most developers and software architects are used to. It involves a paradigmatic shift at the company level that cannot happen overnight. When it comes to projects with tight deadlines, it is never recommended to use a technology that the team is not familiar with. As people prototype with serverless and accumulate more experience, they will naturally see when a project is a good fit for serverless. The transition to serverless should not be forced, nor should this paradigm be used just because of all the hype surrounding it right now.

Conclusion

We have seen that serverless is a powerful tool to add to one’s arsenal. This new paradigm already has a substantial list of use cases, and time will only add more. Like any good tool, it cannot be leveraged in any and all situations, but requires careful consideration from its wielders.

Many might consider the main strength of serverless to be its potential for cost savings, but I would argue that the main strength of serverless is its strong focus on providing business value. Not all organizations utilizing serverless have seen cost savings. However, they have benefited from a much faster time-to-market and the freedom to focus on providing value.

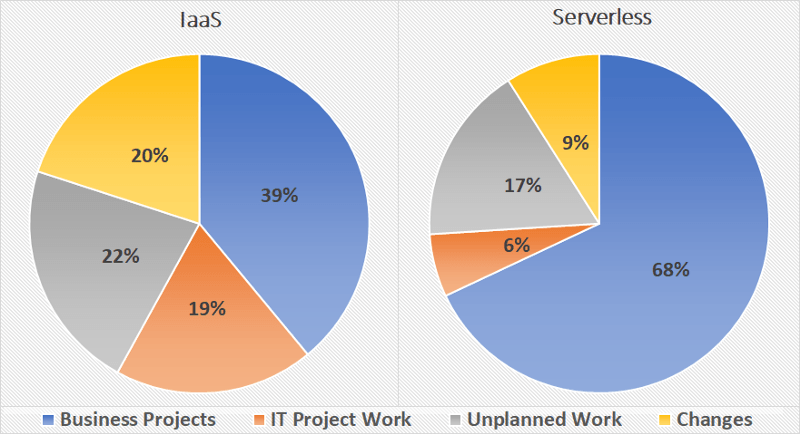

By abstracting away the infrastructure and providing a fine-grained cost model, serverless shifts time from operations to development and from unplanned work to business projects. Organizations are more comfortable attempting new projects with serverless, since the upfront cost is minimal, while prototyping is both simple and quick. In this fast paced world, companies can no longer afford development cycles measured in months and years.

Coca-Cola measured where they spent their time when using IaaS compared to serverless, and this is what they found:

Serverless allows organizations to provide more business value. There is no need to deal with server-specific chores: provisioning, patching, licensing, upgrading, over- and under-utilization, etc. Operations are minimized and much of the boilerplate is already dealt with, allowing developers to focus on the business logic.

Serverless ties your operational costs to your success. As you only pay when services are being used, a new product which sees little use can keep operating with minimal expenses. When faced with exponential growth, the increase in operational costs is mitigated by the increase in profits.

In the next article, we will delve into the serverless ecosystem and compare the main cloud providers and their serverless offerings, along with some of the many open source platforms available.